Yahoo Finance is a well-established website containing various fields of financial data like stock prices, financial news, and reports. It has its own Yahoo Finance API for extracting historic stock prices and market summary.

In this article, we will be scraping the original Yahoo Finance Website instead of relying on the API. The web scraping is achieved by an open-source web crawling framework called Scrapy.

Bulk Scraping Requirement?

Most of the popular websites use a firewall to block IPs with excessive traffic. In that case, you can use Zenscrape, which is a web scraping API that solves the problem of scraping at scale. In addition to the web scraping API, it also offers a residential proxy service, which gives access to the proxies itself and offers you maximum flexibility for your use case.

Web Scraper Requirements

Before we get down to the specifics, we must meet certain technical requirements:

- Python – We will be working in Python for this specific project. Its vast set of libraries and straightforward scripting makes it the best option for Web Scraping.

- Scrapy – This web-crawling framework supported by Python is one of the most useful techniques for extracting data from websites.

- HTML Basics – Scraping involves playing with HTML tags and attributes. However, if the reader is unaware of HTML basics, this website can be helpful.

- Web Browser – Commonly-used web browsers like Google Chrome and Mozilla Firefox have a provision of inspecting the underlying HTML data.

Installation and Setup of Scrapy

We will go over a quick installation process for Scrapy. Firstly, similar to other Python libraries, Scrapy is installed using pip.

pip install Scrapy

After the installation is complete, we need to create a project for our Web Scraper. We enter the directory where we wish to store the project and run:

scrapy startproject <PROJECT_NAME>

As seen in the above snippet of the terminal, Scrapy creates few files supporting the project. We will not go into the nitty-gritty details on each file present in the directory. Instead, we will aim at learning to create our first scraper using Scrapy.

In case, the reader has problems related to installation, the elaborate process is explained here.

Creating our first scraper using Scrapy

We create a python file within the spiders directory of the Scrapy project. One thing that must be kept in mind is that the Python Class must inherit the Scrapy.Spider class.

import scrapy

class yahooSpider(scrapy.Spider):

....

....

This follows the name and URLs of the crawler we are going to create.

class yahooSpider(scrapy.Spider):

# Name of the crawler

name = "yahoo"

# The URLs we will scrape one by one

start_urls = ["https://in.finance.yahoo.com/quote/MSFT?p=MSFT",

"https://in.finance.yahoo.com/quote/MSFT/key-statistics?p=MSFT",

"https://in.finance.yahoo.com/quote/MSFT/holders?p=MSFT"]

The stocks under consideration are those of Microsoft (MSFT). The scraper we are designing is going to retrieve important information from the following three webpages:

- Stocks Summary of the Microsoft Stocks

- Stock Statistics

- Microsoft Financials

The start_urls list contains the URL for each of the above webpages.

Parsing the scraped content

The URLs provided are scraped one by one and the HTML document is sent over to the parse() function.

import scrapy

import csv

class yahooSpider(scrapy.Spider):

# Name of the crawler

name = "yahoo"

# The URLs we will scrape one by one

start_urls = ["https://in.finance.yahoo.com/quote/MSFT?p=MSFT",

"https://in.finance.yahoo.com/quote/MSFT/key-statistics?p=MSFT",

"https://in.finance.yahoo.com/quote/MSFT/holders?p=MSFT"]

# Parsing function

def parse(self, response):

....

....

The parse() function would contain the logic behind the extraction of data from the Yahoo Finance Webpages.

Discovering tags for extracting relevant data

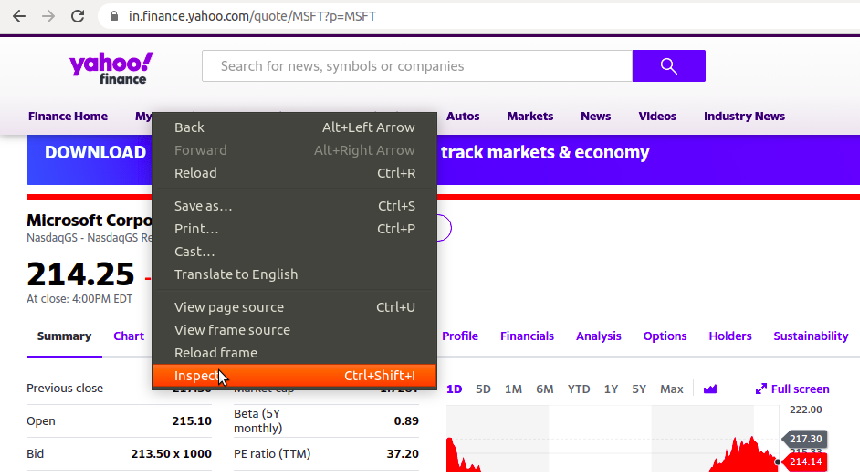

The discovery of tags from the HTML content is done via inspecting the webpage using the Web Browser.

After we press the Inspect button, a panel appears on the right-hand side of the screen containing a huge amount of HTML. Our job is to search for the name of tags and their attributes containing the data we want to extract.

For instance, if we want to extract values from the table containing “Previous Close”, we would need the names and attributes of tags storing the data.

Once we have the knowledge behind HTML tags storing the information of our interest, we can extract them using functions defined by Scrapy.

Scrapy Selectors for Data Extraction

The two selector functions we will use in this project are xpath() and css().

XPATH, independently, is a query language for selecting data from XML or HTML documents. XPATH stands for XML Path Language.

CSS, independently, is a styling language for HTML Language.

More information regarding these selector functions can be obtained from their official website.

# Parsing function

def parse(self, response):

# Using xpath to extract all the table rows

data = response.xpath('//div[@id="quote-summary"]/div/table/tbody/tr')

# If data is not empty

if data:

# Extracting all the text within HTML tags

values = data.css('*::text').getall()

The response value received as an argument contains the entire data within the website. As seen in the HTML document, the table is stored within a div tag having id attribute as quote-summary.

We cast the above information into an xpath function and extract all the tr tags within the specified div tag. Then, we obtain text from all the tags, irrespective of their name (*) into a list called values.

The set of values looks like the following:

['Previous close', '217.30', 'Open', '215.10', 'Bid', '213.50 x 1000', 'Ask', '213.60 x 800' ... 'Forward dividend & yield', '2.04 (0.88%)', 'Ex-dividend date', '19-Aug-2020', '1y target est', '228.22']

The one thing that must be duly noted is that the name and attribute of tags may change over time rendering the above code worthless. Therefore, the reader must understand the methodology of extracting such information.

It may happen that we might obtain irrelevant information from HTML document. Therefore, the programmer must implement proper sanity checks to correct such anomalies.

The complete code provided later in this article contains two more examples of obtaining important information from the sea of HTML jargon.

Writing the retrieved data into a CSV file

The final task of this project is storing the retrieved data into some kind of persistent storage like a CSV file. Python has a csv library for easier implementation of writing to a .csv file.

# Parsing function

def parse(self, response):

# Using xpath to extract all the table rows

data = response.xpath('//div[@id="quote-summary"]/div/table/tbody/tr')

# If data is not empty

if data:

# Extracting all the text within HTML tags

values = data.css('*::text').getall()

# CSV Filename

filename = 'quote.csv'

# If data to be written is not empty

if len(values) != 0:

# Open the CSV File

with open(filename, 'a+', newline='') as file:

# Writing in the CSV file

f = csv.writer(file)

for i in range(0, len(values[:24]), 2):

f.writerow([values[i], values[i+1]])

The above code opens a quote.csv file and writes the values obtained by the scraper using Python’s csv library.

Note: The

csvlibrary is not an in-built Python library and therefore requires installation. Users can install it by running –pip install csv.

Running the entire Scrapy project

After saving all the progress, we move over to the topmost directory of the project created initially and run:

scrapy crawler <CRAWLER-NAME>

In our case, we run scrapy crawler yahoo and the Python script scrapes and stores all the specified information into a CSV file.

Complete code of the Scraper

import scrapy

import csv

class yahooSpider(scrapy.Spider):

# Name of the crawler

name = "yahoo"

# The URLs we will scrape one by one

start_urls = ["https://in.finance.yahoo.com/quote/MSFT?p=MSFT",

"https://in.finance.yahoo.com/quote/MSFT/key-statistics?p=MSFT",

"https://in.finance.yahoo.com/quote/MSFT/holders?p=MSFT"]

# Parsing function

def parse(self, response):

# Using xpath to extract all the table rows

data = response.xpath('//div[@id="quote-summary"]/div/table/tbody/tr')

# If data is not empty

if data:

# Extracting all the text within HTML tags

values = data.css('*::text').getall()

# CSV Filename

filename = 'quote.csv'

# If data to be written is not empty

if len(values) != 0:

# Open the CSV File

with open(filename, 'a+', newline='') as file:

# Writing in the CSV file

f = csv.writer(file)

for i in range(0, len(values[:24]), 2):

f.writerow([values[i], values[i+1]])

# Using xpath to extract all the table rows

data = response.xpath('//section[@data-test="qsp-statistics"]//table/tbody/tr')

if data:

# Extracting all the table names

values = data.css('span::text').getall()

# Extracting all the table values

values1 = data.css('td::text').getall()

# Cleaning the received vales

values1 = [value for value in values1 if value != ' ' and (value[0] != '(' or value[-1] != ')')]

# Opening and writing in a CSV file

filename = 'stats.csv'

if len(values) != 0:

with open(filename, 'a+', newline='') as file:

f = csv.writer(file)

for i in range(9):

f.writerow([values[i], values1[i]])

# Using xpath to extract all the table rows

data = response.xpath('//div[@data-test="holder-summary"]//table')

if data:

# Extracting all the table names

values = data.css('span::text').getall()

# Extracting all the table values

values1 = data.css('td::text').getall()

# Opening and writing in a CSV file

filename = 'holders.csv'

if len(values) != 0:

with open(filename, 'a+', newline='') as file:

f = csv.writer(file)

for i in range(len(values)):

f.writerow([values[i], values1[i]])

Conclusion

The Scrapy Framework may not seem intuitive as compared to other scraping libraries but in-depth learning of Scrapy proves its advantages.

We hope this article helped the reader to understand Web Scraping using Scrapy. You may check out our another Web Scraping article that involves extracting of Amazon product details using Beautiful Soup.

Thanks for reading. Feel free to comment below for queries or suggestions.