Cross entropy is a differentiative measure between two different types of probability. Cross entropy is a term that helps us find out the difference or the similar relation between two probabilities. There are two different types of distributions in any model i.e. The predicted probability distribution and the actual distribution, or true distribution.

Cross entropy is also considered a loss function. This loss function is nothing but the difference between the predicted probability distribution and the actual distribution, or true distribution. Many machine learning models are based on predictions. In this case, the loss function plays a very important role in determining the accuracy and precision of the model based on actual results. In this article, we will discuss more about cross entropy and its functions.

Mathematical Representation of Cross Entropy

The mathematical representation of cross entropy between two different distributions is denoted by P and Q. The formula is H(P, Q) = – Σ [P(x) * log(Q(x))]. This formula contains different terms like, H(P, Q) that are cross entropy between the two different distributions. These distributions are represented in the form of P(x) and Q(x). This formula helps to find out the difference between the two different distributions.

Use of Cross Entropy in Python

Machine Learning and Deep Learning Models

In different machine learning and deep learning models, this cross-entropy is used as a loss function. The different models are based on the predictions. In the prediction of models, this cross-entropy plays a very important role.

Binary and Multiclass Classification Models

In classification models, the provided data is classified into different categories depending on their structure and function. The binary classification classifies the data into two different categories, and the multiclass classification classifies it into many categories. So, the classification is done using this model, which predicts the result. To find out the correctness of this classification model, we need this cross-entropy. In simple words, the loss function for this classification model helps us to understand whether these models are classifying data correctly or not.

Optimization of Neural Network

The cross-entropy i.e. loss function, is also used in neural networks for the optimization of the model. The prediction process and the backpropagation process use the loss function of cross entropy to calculate the difference between predictions and the actual parameters of the neural network. So, this eventually helps optimize the neural network.

SoftMax Function

The SoftMax function is a type of activation function used in the calculation of artificial neural network models. The SoftMax function always takes vector values as input and applies some mathematical operations to that. The output of the SoftMax function is a class probability. This class probability helps to find out the difference between the actual class probability and the predicted class probability. Let’s see the mathematical formula to understand the SoftMax function in more detail.

SoftMax(Z_i) = exponential(Z_i) / sum(exponential(Z_j)) for j = 1 to N

In this formula, (Z_i) is the input, which is a vector. Then in the formula, we divide the vector’s exponential by the sum of the exponentials of all the vectors. N is the total elements in a vector. These input elements are also called logits. Using this formula, we are converting this logit into probability.

Implementation of SoftMax Function Using Numpy

import numpy as np

def SoftMax(z):

e_z = np.exp(z - np.max(z))

return e_z / np.sum(e_z)

z = np.array([1.0, 2.0, 4.0])

output = SoftMax(z)

print(output)

In this example, we are using the numpy library to carry out the basic mathematical operations. The formula of the SoftMax function is applied to find out the probabilities. The vector of elements is provided to find out the probabilities. array() function from the numpy library is used for calculation. Let’s see the result.

You can see in the results that the probabilities for all the values are printed. This is how we can find out the difference between the actual class probabilities and the predicted class probabilities.

Cross Entropy Loss

The cross entropy loss is a loss function in Python. This loss function helps in classification problems like binary classification and multiclass classification problems. This loss function is applicable to any machine learning model that involves a classification problem. We need to apply one formula to calculate the cross entropy loss of the provided values. Let’s see the formula to calculate the cross entropy loss.

CELF = -1/N * sum(sum(Y_i * log(P_i)))

In this formula, CELF is a cross-entropy loss function. This formula involves different terms like (Y_i) which is the true class probability, and (P_i) which is considered the predicted class probability. Where, N is the total number of elements provided as a sample. We are actually calculating the sum of the multiplications of all the samples. At last, the average cross entropy loss is calculated.

Implementation of Cross Entropy Loss Using Numpy

The same mathematical formula can be implemented on machine learning models using the numpy library. Let’s see the code.

import numpy as np

def cross_entropy_loss_function(Y, P):

epsilon = 1e-10

loss = -np.mean(np.sum(Y * np.log(P + epsilon), axis=1))

return loss

In this code, the numpy library is used to implement the cross entropy loss function. We need to add the above piece of code to get the cross entropy loss. The Y and P are the actual class probability and predicted class probability, respectively. Here, the epsilon value is provided for maintaining numerical stability in the equation. The mean function from the numpy library is used to calculate the mean of all samples. This function returns a loss at the end. This way, we can solve classification problems.

Cross Entropy Loss Using PyTorch

What is PyTorch?

PyTorch is a framework where we can develop machine learning and deep learning models. This framework is developed by Facebook’s AI research lab and mainly uses Python language for development. The main advantage of using PyTorch is Graphics Processing Units(GPUs). This framework has a wide variety of tools and models where we can build optimization models, neural networks, machine learning, and deep learning models.

Automatic Differentiation and Optimization Techniques in PyTorch

While building a neural network model in Python, there is one step called backpropagation. In this backpropagation, we need to compute the gradients. The PyTorch provides automatic computation of gradients for input tensors. This is called automatic differentiation. This framework automatically decides the operations on tensors and provides results. The torch.optim model from PyTorch contains different optimization algorithms for various inputs. This model contains different algorithms like RMSprop, Stochastic Gradient Descent, Adagrad, and Adam.

Cross Entropy Loss Function Using PyTorch

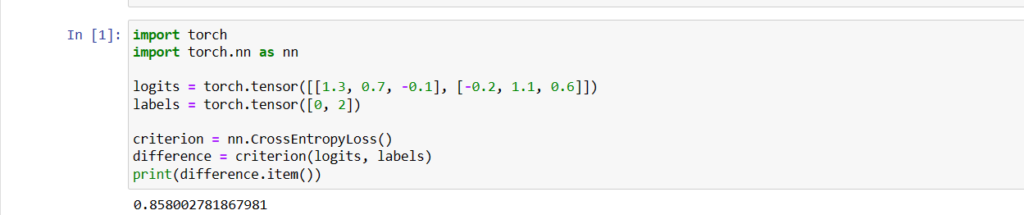

The multiclass classification problem can be solved in PyTorch using the cross entropy loss function. For this, we are using the torch.nn.CrossEntropyLoss class. The difference between the predicted class probabilities and the true class probability can be measured with the help of this class. Let’s see the implementation.

import torch

import torch.nn as nn

logits = torch.tensor([[1.3, 0.7, -0.1], [-0.2, 1.1, 0.6]])

labels = torch.tensor([0, 2])

criterion = nn.CrossEntropyLoss()

difference = criterion(logits, labels)

print(difference.item())

In this line of code, first, we have imported all the required modules, like torch and torch. nn. Then we provided true class and predicted class probabilities and applied the cross-entropy loss function to them. Here, you can see the difference printed at the end. Let’s see the result.

The difference between the true class probability and the predicted class probability is printed.

Difference Between Numpy Implementation and PyTorch Implementation

In both Numpy and PyTorch, we can perform the cross entropy function and calculate the loss function, but there are some differences in implementations and built-in functions. In Numpy, we need to manually add a function for cross-entropy in the code, as we did in the SoftMax function, but on the other hand, in PyTorch, there is automatic differentiation and a cross-entropy loss function for computation. So, we require less effort to build the models using PyTorch as compared to Numpy.

Benefits and Challenges of Using Cross Entropy in Different Models

cross-entropy is used in different models to solve problems like classification and optimization. Let’s see it in detail.

Benefits of Cross Entropy

Cross entropy is very suitable for classification problems and optimization problems. Using these cross entropies, we can find out the difference between true class probabilities and predicted class probabilities. This helps to correctly classify the samples into the correct classes. It improves the model’s accuracy and precision. Incorrect predictions are avoided due to these cross-entropy functions.

Challenges of Cross Entropy

Sometimes, the use of cross entropy for classification and optimization problems causes class imbalance and instability. In some examples, we provide epsilon to minimize this instability. Sometimes, this cross entropy function treats a slightly wrong prediction as a completely wrong prediction. So, this may cause issues while handling the samples and their probabilities.

Summary

In this article, we have learned the concept of cross-entropy in Python. Here, all topics like what is cross-entropy, the formula to calculate cross-entropy, SoftMax function, cross-entropy across-entropy using numpy, cross-entropy using PyTorch, and their differences are covered. The other points, such as the benefits and challenges of cross entropy, are also covered in this article. I hope you will enjoy.

References

Do read the official documentation on the PyTorch framework.