The Bag of Words Model is a very simple way of representing text data for a machine learning algorithm to understand. It has proven to be very effective in NLP problem domains like document classification.

In this article we will implement a BOW model using python.

Understanding the Bag of Words Model Model

Before implementing the Bag of Words Model, let’s just get an intuition about how it works.

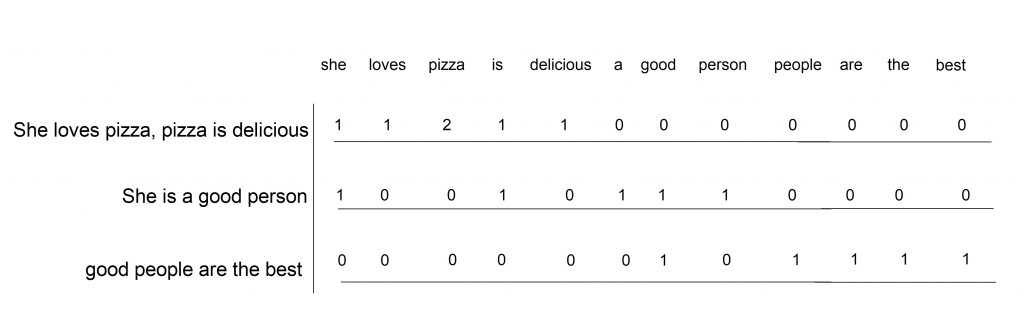

Consider the following text which we wish to represent in the form of vector using BOW model:

- She loves pizza, pizza is delicious.

- She is a good person.

- good people are the best.

Now we create a set of all the words in the given text.

set = {'she', 'loves', 'pizza', 'is', 'delicious', 'a', 'good', 'person', 'people', 'are', 'the', 'best'}

We have 12 different words in our text corpus. This will be the length of our vector.

Now we just have to count the frequency of words appearing in each document and the result we get is a Bag of Words representation of the sentences.

In the above figure, it is shown that we just keep count of the number of times each word is occurring in a sentence.

Implementing Bag of Words Model in Python

Let’s get down to putting the above concepts into code.

1. Preprocessing the data

Preprocessing the data and tokenizing the sentences. (we also transform words to lower case to avoid repetition of words)

#Importing the required modules

import numpy as np

from nltk.tokenize import word_tokenize

from collections import defaultdict

#Sample text corpus

data = ['She loves pizza, pizza is delicious.','She is a good person.','good people are the best.']

#clean the corpus.

sentences = []

vocab = []

for sent in data:

x = word_tokenize(sent)

sentence = [w.lower() for w in x if w.isalpha() ]

sentences.append(sentence)

for word in sentence:

if word not in vocab:

vocab.append(word)

#number of words in the vocab

len_vector = len(vocab)

2. Assign an index to the words

Create an index dictionary to assign unique index to each word

#Index dictionary to assign an index to each word in vocabulary

index_word = {}

i = 0

for word in vocab:

index_word[word] = i

i += 1

3. Define the Bag of Words model function

Finally defining the Bag of Words function to return a vector representation of our input sentence.

def bag_of_words(sent):

count_dict = defaultdict(int)

vec = np.zeros(len_vector)

for item in sent:

count_dict[item] += 1

for key,item in count_dict.items():

vec[index_word[key]] = item

return vec

4. Testing our model

With the complete implementation done, let’s test our model functionality.

vector = bag_of_words(sentences[0])

print(vector)

Limitations of Bag-of-Words

Even though the Bag of Words model is super simple to implement, it still has some shortcomings.

- Sparsity: BOW models create sparse vectors which increase space complexities and also makes it difficult for our prediction algorithm to learn.

- Meaning: The order of the sequence is not preserved in the BOW model hence the context and meaning of a sentence can be lost.

Conclusion

This article was all about understanding how the BOW model works and we implemented our own model from scratch using python. We also focused on the limitations of this model.

Happy Learning!