The to_parquet of the Pandas library is a method that reads a DataFrame and writes it to a parquet format.

Before learning more about the to_parquet method, let us dig deep into what a Parquet format is and why we should use Parquet.

What is Parquet?

Parquet is a storage format based on columnar storage of data. It is mainly used for big data processing as it can efficiently process and manipulate large, complex data.

So what is the use of columnar storage, you may ask? Well, when the data (complex and large) is stored in a column-wise structure rather than in rows, it allows for more efficient compression and encoding of the data.

When stored in a columnar structure, the data becomes easy to query. Storing data in the Parquet file might also reduce the internal input-output operations of your system and reduce complexity.

Also, unlike CSV, a parquet format supports nested data structures and is well-compatible with Apache Arrow tables.

Parquet files are self-describing, making them easy to work with using processing tools such as Apache Spark, Apache Impala, etc.

What is a Data Frame?

A data frame is a 2D table structure that stores values in rows and columns.

A data frame may have thousands of rows of data, of which only a few would be useful. Check out this post on how to filter a data frame.

A simple example of creating a data frame is given below.

The code for creating a data frame with the help of a dictionary is shown below.

import pandas as pd

data={

"Height":[165,158,163],

"Weight":[50,40,45]

}

df=pd.DataFrame(data)

print(df)

Let us take a quick look at the explanation.

In the first line, we import the Pandas library in our environment with its standard alias name-pd.

Next, we create a data dictionary with two keys: Height and Weight. The values corresponding to these keys are enclosed in square brackets[].

This dictionary is then converted to a Data Frame with the help of a method-pd.DataFrame(). It is stored in an object called df.

In the following line, we are printing this data frame using print().

Why Should a Data Frame Be Converted Into Parquet?

As you may know, a data frame is the most important and frequently used data structures of the Pandas library. Data frames are very powerful tools for data storage, not only in Python but also in R.

When we are talking about the Pandas Library, it is a must to check out this post on the data structures of Pandas. Pandas module tutorial

So why is there a need to convert such a strong storage tool to Parquet?

As we already discussed, parquet allows for adequate compression, which may not be possible with a data frame.

A data frame may not be the right choice to store huge amounts of data because querying might become messy with a data frame.

Also, when a data frame is converted to a Parquet format compatible with other tools like Apache and Julia, it will be easy for data exchange and even working with special tools unique to only these libraries.

Data security is one of the biggest reasons to convert a data frame to parquet.

Data is encoded in parquet format. So unless and until any decoding operations are performed on the file, the data is secured.

Prerequisites

Before writing a data frame to parquet, we must ensure that the necessary packages are installed in the system.

The necessary packages are:

- PyArrow

- fastparquet

PyArrow

PyArrow is also a Python library that works with larger and more complex datasets.

PyArrow provides fast, memory-efficient data structures and algorithms that can be used for various data processing tasks, such as reading and writing data to and from disk and performing data transformations.

It can also be used to work with distributions like Apache Spark.

Fastparquet

As the name suggests, fastparquet is a library designed especially to work with parquet files(reading and writing parquet formats).

It is designed to be fast and memory-efficient, allowing users to work with large datasets easily.

It can be integrated with other python libraries, such as PyArrow and Pandas to perform interconversions between different data structures—for example, Parquet to data frame or data frame to Parquet.

Here is how you can install the required packages.

!pip install pyarrow

!pip install fastparquet

How to Write a Data Frame to_parquet?

Once you have installed the packages, you can start working with the parquet file format.

The to_parquet takes a data frame as input and writes it to a parquet file.

The syntax for to_parquet is given below.

DataFrame.to_parquet(path, engine='auto', compression='snappy', index=None, partition_cols=None, **kwargs)

The important parameters are:

| Number | Name | Description | Default Value | Requirability |

| 1 | path | If the object is a string, it will be used as a Root Directory path when writing a partitioned dataset The path should be a file or a file-like object; which means it should support the write() method One of the engines- fastparquet does not support the file-like objectSo fastparquet engine mode should not be used when the path is file-like | str or file-like object | Required |

| 2 | engine | The type of parquet library to use The engine can be auto,pyarrow,fastparquetIf pyarrow is unavailable, the engine is changed to fastparquet | auto | Required |

| 3 | compression | Name of the compression to use If no compression is required(when the file size is small), go for NoneCan also be snappy,gzip,brotli | snappy | Required |

| 4 | index | This argument decides if the starting index of the data frame should be included in the result or not If index is True, the index(es) of the data frame is included in the outputIf index is False, the index(es) of the data frame is not included in the outputThere is another way of specifying the index, which is the RangeIndex which has three parameters;start,stop,step Start indicates the index with which the range should start, Stop gives the value at which we need to stop considering the values, and step acts like an increment or decrement operator Now the advantage of using this RangeIndex is that it is memory efficient; does not take much space | None | Required |

| 5 | partition_cols | This argument is used when we wish to partition the dataset It gives the column names by which the partition of the dataset should take place Columns are partitioned in the order they are given This argument must be None if the path is not a string | list, Default: None | Optional |

| 6 | **kwargs | Additional arguments are passed to the parquet library. | – | Required |

to_parquetIn this post, we are going to see the writing of a Data Frame to parquet, a special application of partition_cols, and comparison between the compression modes(gzip, snappy, and brotli).

Writing a Simple Data Frame to the Parquet Format

Let us see a simple example of writing a data frame to a parquet file.

First, as demonstrated above, we need to create a data frame.

The code is as follows:

import pandas as pd

# Create a sample DataFrame

df = pd.DataFrame({'A': [1, 2, 3,10], 'B': [4, 5, 6,11], 'C': [7, 8, 9,12], 'D':[13,14,15,16]})

print(df)

In the first line, we are importing the Pandas library, which is essential to work with data frames.

Next, we create a data frame with the help of a collection of lists and store it in a new variable called df.

Lastly, we are printing this data frame using the print().

The data frame is obtained as follows:

Now, we need to write this DataFrame to parquet.

This operation can be done using to_parquet.

The code is given below.

df.to_parquet('sample.parquet')

pd.read_parquet('sample.parquet')

In the first line, we are converting the data frame to parquet using to_parquet. The name of the parquet file is ‘sample. parquet’.

In the following line, we are using the pd.read_parquet method to read the parquet file.

The output is shown below.

As you may have seen from the output, a new file for the parquet format is created at the left end. If you use anaconda or jupyter, this file might be downloaded into your local storage area.

Partitioning the Parquet File

Although the partition_cols is an optional field in the syntax, it can be used to efficiently retrieve specific data which is used recursively.

This argument takes the parquet file and the columns which need to be partitioned and stores the partitioned parquet files in a path/directory.

We will take the same example we have seen earlier(sample.parquet) and implement a partition on this file.

This method is implemented as shown below:

df.to_parquet('/content/drive/MyDrive/parquetfile/sample.parquet',partition_cols=['A','B'],engine='fastparquet',compression='gzip')

To begin with, we need to obtain the location of the file we are going to use.

Next, we are going to use this location to partition the file.

As seen from the above snippet, we are specifying the location as a path, and the columns to be partitioned are columns ‘A’ and ‘B. We are using the fastparquet engine, and the compression mode is gzip.

We need to create a Directory for the partitioned files to be stored. You can access these partitioned files from this directory.

The partitioned(divided) files are stored in the path drive->MyDrive and the directory is parquetfile.

The partitioned files are supposed to look like this.

This partition of the files might come in handy when you are repeatedly using the same data or a part of the data.. Instead of accessing the whole data file, you can retrieve what you are looking for if the parquet file is partitioned.

The Time taken by gzip compression

We are first going to check the time taken with gzip.

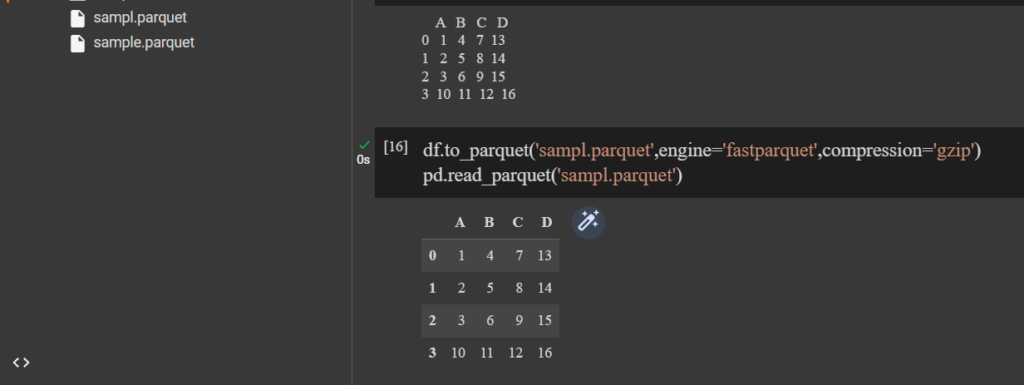

We are going to take the same example of converting the data frame to parquet but use the gzip compression.

Consider the code given below:

df.to_parquet('sampl.parquet',engine='fastparquet',compression='gzip')

pd.read_parquet('sampl.parquet')

The output is a parquet file, as shown below.

Let us check the time taken.

%timeit df.to_parquet('sampl.parquet',engine='fastparquet',compression='gzip')

When the compression mode is gzip, we check the time to create a ‘sampl.parquet’ file.

The %timeit module, also called a magic module of Python, is used to check the time to execute a single line code.

And here is the output for the above code.

Time taken by snappy compression

Let us check the time taken to create the parquet file using snappy compression.

The code is given below.

%timeit df.to_parquet('sampl.parquet',engine='fastparquet',compression='snappy')

We are checking the time taken to create a file called ‘sampl. parquet’ when the compression mode is snappy. The %timeit module of python is used for this task.

The time taken to create the parquet file using the snappy compression is shown below.

Time taken by brotli compression

The last compression type is brotli.

%timeit df.to_parquet('sampl.parquet',engine='fastparquet',compression='brotli')

Creating a parquet file with brotli compression takes a bit longer than the other two compression modes.

Here is the comparison between three compression types for reference.

As you can see, the time taken is short when the compression mode is snappy, which is just 3.98 milliseconds. Since it is faster and more efficient, we mostly use snappy compression as default.

Conclusion

To summarize, we have seen the Parquet file format and its uses.

We learned what a data frame is and why there is a need to convert the data frame to Parquet format.

We have seen how to create a data frame from a dictionary and the important packages required to write a data frame to parquet and their installation.

Next, we have seen the syntax of the to_parquet() method and a detailed explanation of its arguments.

Coming to the examples, firstly, we have seen how to create a data frame from lists and then convert this data frame to a parquet file.

We have also seen how, after conversion, a new parquet file is created in the local storage area.

Next, we have seen the application of partition_cols, which is used to create a partitioned file segment that might help easily retrieve frequently used data files.

Lastly, we have seen the comparison between the three compression modes(snappy,gzip,brotli) and we understood why snappy is used as the default compression mode. It is so because snappy compression takes less time to create a parquet file out of a more efficient data frame.

References

You can find more about this method here

Learn more about the timeit module and how to measure the execution of small code snippets

See how to use the timeit module from this stack overflow answer chain