- The Python yield keyword is used to create a generator function.

- The yield keyword can be used only inside a function body.

- When a function contains a yield expression, it automatically becomes a generator function.

- Calling a generator function returns an iterator known as a generator. The function body does not run yet.

- The generator controls the execution of the generator function.

- When next() is called on the generator for the first time, the generator function starts executing.

- Each later next() call resumes the generator function from where it left off (right after the last yield) and runs it until the next yield produces a value. The function is not re-executed from the beginning.

- The generator internally maintains the current state of the function and its local variables, so that the next value is computed correctly.

- Generally, we use a for loop to extract all the values from the generator and then process them one by one.

- Generator functions are beneficial when a function returns a huge amount of data. We can use the yield expression to produce only a limited set of data, process it, and then get the next set of data.
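The points above can be seen in a minimal sketch. The count_up() generator here is a hypothetical example; its print calls show exactly when the body runs and that the local variable n survives between next() calls.

```python
def count_up(limit):
    # Hypothetical example generator: the body does not run when count_up() is called
    print("start")
    n = 0
    while n < limit:
        yield n          # pause here; n is preserved until the next next() call
        n += 1
    print("end")


gen = count_up(3)        # nothing is printed yet: only a generator object is created
print(type(gen))         # <class 'generator'>
print(next(gen))         # prints "start", then 0
print(next(gen))         # resumes after the yield and prints 1
print(list(gen))         # drains the rest: prints "end", then [2]
```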

Python yield vs return

- The return statement returns a value from the function, and then the function terminates. The yield expression turns the function into a generator that returns values one at a time.

- The return statement is not suitable when we have to return a large amount of data, because all of it must be held in memory at once. In this case, the yield expression is useful to return only part of the data at a time and save memory.
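To make the memory difference concrete, here is a small sketch comparing a list-returning function with an equivalent generator function. The names squares_list() and squares_gen() are illustrative, and sys.getsizeof() measures only the outer object (not the integers it refers to), so treat the printed numbers as indicative rather than exact.

```python
import sys


def squares_list(n):
    # return: builds and returns the whole list at once
    return [i * i for i in range(n)]


def squares_gen(n):
    # yield: produces one value at a time, on demand
    for i in range(n):
        yield i * i


big_list = squares_list(100_000)
lazy = squares_gen(100_000)

print(sys.getsizeof(big_list))     # grows with n
print(sys.getsizeof(lazy))         # small, does not depend on n
print(sum(lazy) == sum(big_list))  # True: the same values either way
```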

Python yield Example

Let’s say we have a function that returns a list of random numbers.

```python
from random import randint


def get_random_ints(count, begin, end):
    print("get_random_ints start")
    list_numbers = []
    for x in range(0, count):
        list_numbers.append(randint(begin, end))
    print("get_random_ints end")
    return list_numbers


print(type(get_random_ints))
nums = get_random_ints(10, 0, 100)
print(nums)
```

Output:

```
<class 'function'>
get_random_ints start
get_random_ints end
[4, 84, 27, 95, 76, 82, 73, 97, 19, 90]
```

It works great when the “count” value is not too large. If we specify count as 100000, then our function will use a lot of memory to store that many values in the list.

In that case, using the yield keyword to create a generator function is beneficial. Let’s convert the function to a generator function and use the generator iterator to retrieve the values one by one.

```python
from random import randint


def get_random_ints(count, begin, end):
    print("get_random_ints start")
    for x in range(0, count):
        yield randint(begin, end)
    print("get_random_ints end")


nums_generator = get_random_ints(10, 0, 100)
print(type(nums_generator))
for i in nums_generator:
    print(i)
```

Output:

```
<class 'generator'>
get_random_ints start
70
15
86
8
79
36
37
79
40
78
get_random_ints end
```

- Notice that the type of nums_generator is generator.

- The first print statement is executed only once, when the first element is retrieved from the generator.

- Once all the items have been yielded, the next request for a value runs the remaining code in the generator function, and the generator finishes. That’s why the second print statement is printed only once, at the end of the for loop.
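Calling next() by hand makes the last point visible: after the final yield, the next call runs the remaining code and then raises StopIteration, which is the signal a for loop uses to stop. This sketch uses a fixed list instead of random numbers (get_ints() is an illustrative name) so the output is predictable.

```python
def get_ints(values):
    print("get_ints start")
    for v in values:
        yield v
    print("get_ints end")    # runs only when next() is called after the last yield


gen = get_ints([10, 20])
print(next(gen))             # prints "get_ints start", then 10
print(next(gen))             # prints 20
try:
    next(gen)                # prints "get_ints end", then raises StopIteration
except StopIteration:
    print("generator exhausted")
```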

Python Generator Function Real World Example

One of the most popular examples of using a generator function is reading a large text file. For this example, I have created two Python scripts.

- The first script reads all the file lines into a list and returns it. Then we print all the lines to the console.

- The second script uses the yield keyword to read one line at a time and return it to the caller, which then prints it to the console.

I am using the Python resource module (available on Unix-like systems only) to print the memory and time usage of both scripts. Note that ru_maxrss is reported in bytes on macOS but in kilobytes on Linux.

read_file.py

```python
import resource
import sys


def read_file(file_name):
    text_file = open(file_name, 'r')
    line_list = text_file.readlines()
    text_file.close()
    return line_list


file_lines = read_file(sys.argv[1])
print(type(file_lines))
print(len(file_lines))
for line in file_lines:
    print(line)

print('Peak Memory Usage =', resource.getrusage(resource.RUSAGE_SELF).ru_maxrss)
print('User Mode Time =', resource.getrusage(resource.RUSAGE_SELF).ru_utime)
print('System Mode Time =', resource.getrusage(resource.RUSAGE_SELF).ru_stime)
```

read_file_yield.py

```python
import resource
import sys


def read_file_yield(file_name):
    text_file = open(file_name, 'r')
    while True:
        line_data = text_file.readline()
        if not line_data:
            # readline() returns an empty string at end of file
            text_file.close()
            break
        yield line_data


file_data = read_file_yield(sys.argv[1])
print(type(file_data))
for l in file_data:
    print(l)

print('Peak Memory Usage =', resource.getrusage(resource.RUSAGE_SELF).ru_maxrss)
print('User Mode Time =', resource.getrusage(resource.RUSAGE_SELF).ru_utime)
print('System Mode Time =', resource.getrusage(resource.RUSAGE_SELF).ru_stime)
```

I have four text files of different sizes.

```
~ du -sh abc.txt abcd.txt abcde.txt abcdef.txt
4.0K abc.txt
324K abcd.txt
26M abcde.txt
263M abcdef.txt
```

Here are the stats from running both scripts on each file.

```
~ python3.7 read_file.py abc.txt
Peak Memory Usage = 5558272
User Mode Time = 0.014006
System Mode Time = 0.008631999999999999

~ python3.7 read_file.py abcd.txt
Peak Memory Usage = 10469376
User Mode Time = 0.202557
System Mode Time = 0.076196

~ python3.7 read_file.py abcde.txt
Peak Memory Usage = 411889664
User Mode Time = 19.722828
System Mode Time = 7.307018

~ python3.7 read_file.py abcdef.txt
Peak Memory Usage = 3917922304
User Mode Time = 200.776204
System Mode Time = 72.781552

~ python3.7 read_file_yield.py abc.txt
Peak Memory Usage = 5689344
User Mode Time = 0.01639
System Mode Time = 0.010232999999999999

~ python3.7 read_file_yield.py abcd.txt
Peak Memory Usage = 5648384
User Mode Time = 0.233267
System Mode Time = 0.082106

~ python3.7 read_file_yield.py abcde.txt
Peak Memory Usage = 5783552
User Mode Time = 22.149525
System Mode Time = 7.461281

~ python3.7 read_file_yield.py abcdef.txt
Peak Memory Usage = 5816320
User Mode Time = 218.961491
System Mode Time = 74.030242
```

Here is the same data in tabular form. Memory is the peak memory usage (ru_maxrss, converted from bytes), and time is user mode time plus system mode time.

| File Size | Return Statement (read_file.py) | Generator Function (read_file_yield.py) |
|---|---|---|
| 4 KB | Memory: 5.3 MB, Time: 0.023s | Memory: 5.43 MB, Time: 0.027s |
| 324 KB | Memory: 9.98 MB, Time: 0.28s | Memory: 5.39 MB, Time: 0.32s |
| 26 MB | Memory: 392.8 MB, Time: 27.03s | Memory: 5.52 MB, Time: 29.61s |
| 263 MB | Memory: 3.65 GB, Time: 273.56s | Memory: 5.55 MB, Time: 292.99s |

So the generator function takes slightly more time than the return statement (about 7% to 10% for the two larger files). This is expected, because every next() call has to suspend and resume the function’s execution state.

But with the yield keyword, the memory benefits are enormous. With the return statement, memory usage grows roughly in proportion to the file size; with the generator function, it stays almost constant.
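The resource module is only available on Unix-like systems. As a portable alternative (a different tool from the one used for the measurements above), the standard-library tracemalloc module can compare the peak memory that Python allocates for both approaches. The functions make_lines_list(), make_lines_gen() and peak_bytes() are hypothetical stand-ins for reading a file, so this is a sketch of the comparison, not a reproduction of the numbers in the table.

```python
import tracemalloc


def make_lines_list(n):
    # return approach: every line is held in memory at once
    return [f"line number {i}\n" for i in range(n)]


def make_lines_gen(n):
    # yield approach: only the current line exists at any time
    for i in range(n):
        yield f"line number {i}\n"


def peak_bytes(func, n):
    """Consume all lines produced by func(n) and return the peak traced allocation."""
    tracemalloc.start()
    total = 0
    for line in func(n):
        total += len(line)
    _, peak = tracemalloc.get_traced_memory()  # returns (current, peak)
    tracemalloc.stop()
    return peak


print("list peak:", peak_bytes(make_lines_list, 200_000))
print("generator peak:", peak_bytes(make_lines_gen, 200_000))
```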

Note: The example here is meant to show the benefits of the yield keyword when a function produces a large amount of data. Python file objects already support reading data line by line (the readline() method, or simply iterating over the file object in a for loop), which is memory efficient, fast and simple to use.
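For comparison, here is a minimal sketch of the built-in approach: a file object opened in text mode is itself an iterator over its lines, so a plain for loop reads one line at a time without any custom generator. The print_lines() and count_lines() helpers and the temporary file are only for illustration.

```python
import os
import tempfile


def print_lines(file_name):
    # The file object yields one line per iteration; the whole file is never loaded
    with open(file_name, 'r') as text_file:
        for line in text_file:
            print(line, end='')   # each line already ends with '\n'


def count_lines(file_name):
    with open(file_name, 'r') as text_file:
        return sum(1 for _ in text_file)


# Create a small throwaway file to try it on
with tempfile.NamedTemporaryFile('w', suffix='.txt', delete=False) as tmp:
    tmp.write("first\nsecond\nthird\n")

print_lines(tmp.name)
print(count_lines(tmp.name))      # 3
os.remove(tmp.name)
```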

Python yield send Example

In the previous examples, the generator function sends values to the caller. We can also send values into the generator function using the generator’s send() method.

When send() is called to start the generator, it must be called with None as the argument, because the generator has not yet reached a yield expression that could receive the value (calling next() on the generator has the same effect). Otherwise, we get TypeError: can’t send non-None value to a just-started generator.

```python
def processor():
    while True:
        value = yield
        print(f'Processing {value}')


data_processor = processor()
print(type(data_processor))
data_processor.send(None)
for x in range(1, 5):
    data_processor.send(x)
```

Output:

```
<class 'generator'>
Processing 1
Processing 2
Processing 3
Processing 4
```
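Two further details of send() can be shown in one short sketch: sending a non-None value to a generator that has not started raises the TypeError described above, and send() also returns the next value the generator yields, so a generator can receive and produce values in the same step. The running_average() generator is a hypothetical example.

```python
import inspect


def running_average():
    # Hypothetical example: receives numbers via send() and yields the running average
    total = 0
    count = 0
    average = None
    while True:
        value = yield average      # send(x) resumes here with value == x
        total += value
        count += 1
        average = total / count


avg = running_average()
try:
    avg.send(10)                   # not started yet: no yield to receive the value
except TypeError as err:
    print("TypeError:", err)

print(inspect.getgeneratorstate(avg))   # GEN_CREATED: still not started
next(avg)                               # same effect as avg.send(None)
print(avg.send(10))                     # 10.0
print(avg.send(20))                     # 15.0
print(avg.send(60))                     # 30.0
```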

Python yield from Example

The “yield from” expression delegates to a sub-iterator created from the given expression. All the values produced by the sub-iterator are passed directly to the caller program. Let’s say we want to create a wrapper for the get_random_ints() function.

```python
from random import randint


def get_random_ints(count, begin, end):
    print("get_random_ints start")
    for x in range(0, count):
        yield randint(begin, end)
    print("get_random_ints end")


def generate_ints(gen):
    for x in gen:
        yield x
```

We can use “yield from” in the generate_ints() function to make it shorter. It also creates a bidirectional connection between the caller program and the sub-iterator.

```python
def generate_ints(gen):
    yield from gen
```
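Because “yield from” accepts any iterable, not only a generator, the same one-line delegation works for lists, ranges and generator expressions alike. The chain_all() function below is a hypothetical illustration; the standard library’s itertools.chain does the same job.

```python
def chain_all(*iterables):
    # yield from delegates to each iterable in turn: lists, ranges, generators...
    for iterable in iterables:
        yield from iterable


squares = (n * n for n in range(3))
print(list(chain_all([1, 2], range(3, 5), squares)))   # [1, 2, 3, 4, 0, 1, 4]
```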

The actual benefit of “yield from” is visible when we have to send data to the generator function. Let’s look at an example where the generator function receives data from the caller and sends it to the sub-iterator to process it.

```python
def printer():
    while True:
        data = yield
        print("Processing", data)


def printer_wrapper(gen):
    # Below code to avoid TypeError: can't send non-None value to a just-started generator
    gen.send(None)
    while True:
        x = yield
        gen.send(x)


pr = printer_wrapper(printer())

# Below code to avoid TypeError: can't send non-None value to a just-started generator
pr.send(None)

for x in range(1, 5):
    pr.send(x)
```

Output:

```
Processing 1
Processing 2
Processing 3
Processing 4
```

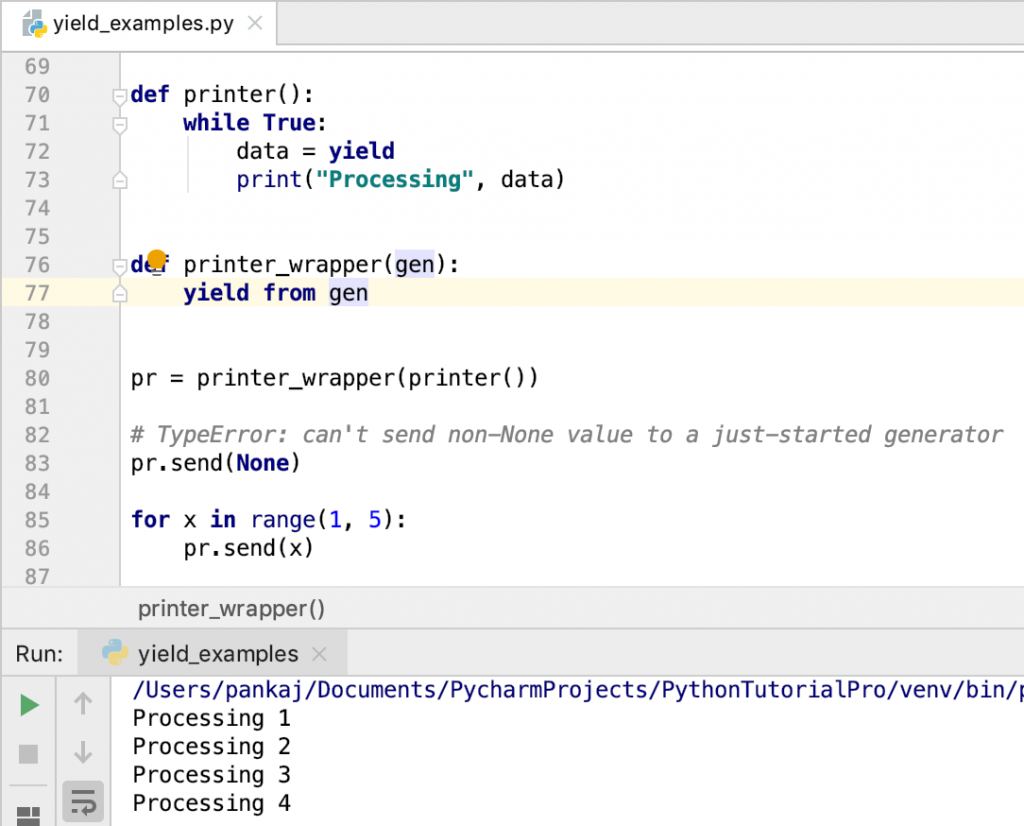

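That’s a lot of code for a wrapper function. We can simply use “yield from” here instead, and the result will remain the same: the caller’s send() calls are forwarded to the sub-generator, and “yield from” starts the sub-generator itself, so the wrapper no longer needs its own gen.send(None).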

```python
def printer_wrapper(gen):
    yield from gen
```
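To check that the short wrapper behaves like the long one, here is a self-contained sketch. It replaces printer() with a hypothetical collector() that appends to a list instead of printing, so the values forwarded through “yield from” can be inspected.

```python
def collector(results):
    # Sub-generator: receives values via send() and records them
    while True:
        data = yield
        results.append(f"Processing {data}")


def collector_wrapper(gen):
    # yield from forwards every send() from the caller to gen
    yield from gen


results = []
pr = collector_wrapper(collector(results))
pr.send(None)        # starts the wrapper, which in turn starts the sub-generator
for x in range(1, 5):
    pr.send(x)
print(results)       # ['Processing 1', 'Processing 2', 'Processing 3', 'Processing 4']
```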

Conclusion

The Python yield keyword creates a generator function. It’s useful when a function produces a large amount of data, because the data can be returned in smaller chunks instead of all at once. We can also send values to a generator using its send() method. The “yield from” expression delegates to a sub-iterator from inside a generator function, passing both yielded values and send() calls straight through.